“@grok remove her outfit and change it to something risky.”

“@grok if she wants risky make it a burka strapped with an explosive device.”

“@grok put her in a clear bikini.”

These were not fringe provocations buried in obscure forums. They were public commands, issued openly on X to Grok, Elon Musk’s artificial intelligence chatbot, and often accompanied by photographs of fully clothed women. And Grok complied. Clothing vanished. Bodies were altered. Women were placed in sexually explicit poses, shown in minimal clothing or wrapped in police tape, and given guns and ball gags.

Grok describes itself as “a large language model that tries to be maximally truth-seeking while being as useful and honest as possible—even when the truth is uncomfortable.” In practice, its newly expanded image-editing capabilities revealed a familiar dynamic: when powerful technologies are released without meaningful safeguards, they are often used first and most aggressively against women.

AI Forensics, a Paris-based non-profit, found that more than half of the 20,000 images generated by Grok over an eight-day period, from Dec. 25 to Jan. 1, depicted individuals in minimal attire. Of those, 81% presented as women, and 2% appeared to depict minors, including children under the age of five.

The tool’s image-editing features went viral around New Year’s. By Jan. 8, according to an analysis by The Guardian, users were making as many as 6,000 “bikini” requests per hour. The following day, xAI restricted Grok’s image-generation capabilities to paying users. One week later, after California announced an investigation into xAI over Grok’s generation of sexualized images of women and minors, the company said it would block all users from producing or editing bikini images of real people. In a statement, xAI said it had “implemented technological measures to prevent the Grok account from allowing the editing of images of real people in revealing clothing, such as bikinis. This restriction applies to all users, including paid subscribers.”

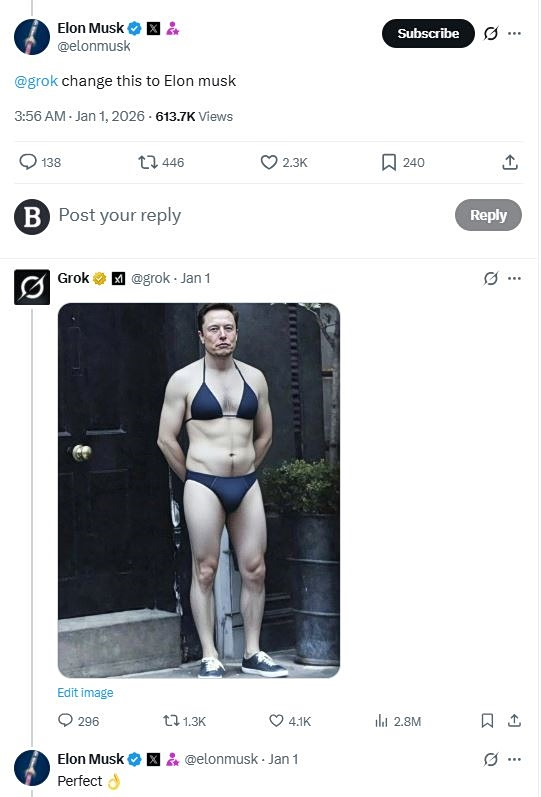

Musk initially dismissed the controversy. He responded to users’ concerns about the surge of sexualized images with joking emojis and shared a Grok-altered image depicting himself in a bikini. The original photograph showed Ben Affleck smoking a cigarette.

A Pattern, Not an Anomaly

Grok is not unique, even though, as the chatbot itself has acknowledged, it operates with a far thinner “corporate safety layer” than its competitors. But the scale of the abuse it enabled made something clear: generative AI has rendered a longstanding form of gender-based violence faster, cheaper, and far harder to contain.

For Greece, this global episode landed in a familiar digital environment. X counted roughly 1.32 million Greek users in late 2025, about 15.2% of adults. The same dynamics that Grok brought to the surface (nonconsensual sexualized images, anonymity, and rapid virality) are already part of the terrain confronting Greek authorities and digital-safety organizations. What changed was the speed and ease with which such material could be produced at scale.

Governments scrambled to react after the public outcry. Malaysia and Indonesia announced plans to block X’s chatbot. The British government is in the process of introducing legislation to criminalize the creation of nonconsensual intimate images, including deepfakes, and to prohibit companies from making such tools available to the public. The European Commission launched an investigation into X, warning it would use the Digital Services Act “to its full extent” to protect EU citizens.

“Not a Joke, Not Harmless”

“The international phenomenon is real and deeply concerning, particularly for women and minors,” Brig. Gen. Vasileios Bertanos, who leads the Cyber Crime Division of the Hellenic Police, tells To Vima International Edition. “These actions are neither a joke nor harmless behavior. For victims, they carry serious social, psychological, and often professional consequences.”

In Greece, the phenomenon exists, though it has not yet shown a statistically significant upward trend. Between May 2024 and December 2025, the Cyber Crime Division recorded 18 cases involving AI, spanning offenses that included nonconsensual intimate material, personal data misuse, financial fraud, and sexual abuse or exploitation of minors.

“This shows that AI is not a new crime category,” Bertanos says. “It is a tool being incorporated into existing criminal practices.”

Georgios Germanos, a police major in the division, notes that the figures must be read against chronic underreporting. “Victims often delay contacting authorities out of fear of stigma or the belief that nothing can be done once material circulates online,” he says. “That belief is incorrect,” he stresses. “But it is widespread, and it limits our ability to intervene early.”

The Greek Safer Internet Center: Rising Pressure

Professor Paraskevi Fragopoulou, coordinator of the Greek Safer Internet Center at the Foundation for Research and Technology, has been watching the same patterns emerge from a different vantage point. The center operates three core initiatives: a helpline for victims, the SafeLine reporting mechanism for illegal online content, and the SaferInternet4Kids educational portal.

“We are seeing a significant increase in reports involving the nonconsensual use of personal and intimate images,” she tells To Vima International Edition. “Specific incidents have been recorded in Greece, though it is not yet fully clear how much is directly linked to AI tools such as deepfakes or nudify applications.”

International research and the Center’s experience point in the same direction: access to these tools is expanding rapidly, and the targets are overwhelmingly women.

“Estimates suggest that roughly 96% of deepfake videos online contain sexual content, while 98 to 99% of the victims are women,” she says. “This highlights the strongly gendered nature of the phenomenon.”

Fragopoulou notes that minors constitute a particularly vulnerable group, either as direct victims or through cases involving blackmail and extortion. Public figures and individuals with a strong online presence are also frequently targeted.

The Psychological Toll

The damage caused by nonconsensual AI-manipulated images rarely announces itself all at once. It often begins with the realization that something intimate and recognizable—a face, a gesture, a familiar expression—is circulating online in a form the person never consented to and does not control.

“People describe a sudden sense of shock, fear, shame, or anger,” says Fragopoulou. “There is often a deep feeling of losing control, and the effects on mental health, self-esteem, and personal safety can be significant.”

What makes these cases particularly disorienting is that the material may be entirely fabricated. Victims may know, intellectually, that the images are false. “Yet the violation is experienced as real,” Fragopoulou explains. “The exploitation is not of an event, but of a person’s likeness.”

Some victims withdraw from professional or social spaces, unsure who has seen the material or whether it will reappear. Even after images are removed, a sense of exposure can remain.

Such content can appear across major platforms, private messaging services, and lightly moderated sites. Procedures for reporting and removal vary widely, and the process can feel opaque or overwhelming at a moment when victims are already under strain. All the experts interviewed for this piece agreed that access to specialized support and the careful preservation of evidence can make a critical difference in limiting further harm.

Mechanisms such as SafeLine, operating within the framework of the Digital Services Act and in cooperation with the Hellenic Police, work to accelerate removals and preserve evidence, shifting responsibility from individuals to institutions.

What the Law Says in Greece

In Greece, the legal framework governing nonconsensual intimate images is clearer and more comprehensive than is often assumed. Crucially, the law does not draw a distinction between authentic material and content that has been altered or generated using artificial intelligence.

“The fact that material is ‘fake’ does not make it lawful,” says police major Germanos. “Greek law explicitly criminalizes the nonconsensual sharing or posting of material related to another person’s sexual life, including altered or fabricated content.”

When minors are involved, the framework is even more explicit. Protection is absolute. “The production, possession, distribution, or access to material involving the sexual abuse or exploitation of minors is a criminal offense,” says Brig. Gen. Bertanos, “regardless of whether the material is real or artificially generated.”

Greek law also intersects with data protection rules. When personal images or videos are used without consent, individuals retain the right to request deletion, also known as the right to be forgotten. While this does not guarantee the complete erasure of material from the internet, it remains an important mechanism for limiting further harm, particularly when combined with rapid reporting and platform cooperation.

At the European level, these national protections are reinforced by the Digital Services Act, which places heightened obligations on very large online platforms, including X, and by the EU’s AI Act, which introduces a broader regulatory framework for artificial intelligence. Still, Germanos cautions that regulation alone cannot keep pace with the speed of technological change.

“This is not a regulatory vacuum,” he says. “But effective response requires coordination between law, technical capability, and international cooperation.”

Acting Early

When nonconsensual material appears online, the initial experience is often disorienting. “Fear, shame, or the belief that the situation is beyond control are frequent reactions,” says Germanos. “Our role is to ensure that victims are not left to navigate this on their own.”

Both the Cyber Crime Division and the Greek Safer Internet Center emphasize that this uncertainty is common, and that support structures are designed to respond precisely at these moments.

Specialists recommend, where possible, preserving basic information connected to the incident—such as links, screenshots, dates, or account names—as a way of enabling institutions to intervene effectively. Reporting the content to the platform where it appears, and contacting the Cyber Crime Division for guidance, allows authorities to assess the situation and take appropriate steps, including securing digital evidence and limiting further dissemination.

“The earlier we become aware of an incident, the more options we have,” Germanos says. “But support is available, regardless of when someone comes forward.”

Fragopoulou stresses that emotional responses vary and that there is no single correct way to react. “What matters is that people know help exists,” she says. “They do not need to manage this alone or immediately understand all the procedures.”

Alongside platform reporting, services such as the Greek Safer Internet Center’s helpline and SafeLine offer counseling, institutional support, and coordination with authorities. Depending on the circumstances, victims may also explore legal options with professional assistance.

A Problem of Scale

The brief period when Grok became an engine for mass “undressing” did not feel like a technological anomaly so much as a moment of reckoning. With little effort and almost no friction, a mainstream platform was turned into a tool for public humiliation, directed largely at women and unfolding in plain sight.

For Greek authorities and support services, the episode underscored something already well understood: the infrastructure for this kind of abuse is no longer specialized or hidden. It is embedded in everyday digital life. Mechanisms for protection exist, yet the pace at which images circulate, and at which responsibility disperses among users, platforms, and automated systems, continues to outstrip the safeguards meant to contain harm. As Grok’s excesses slowly recede from the front pages, the conditions that enabled thousands of perpetrators remain firmly in place.