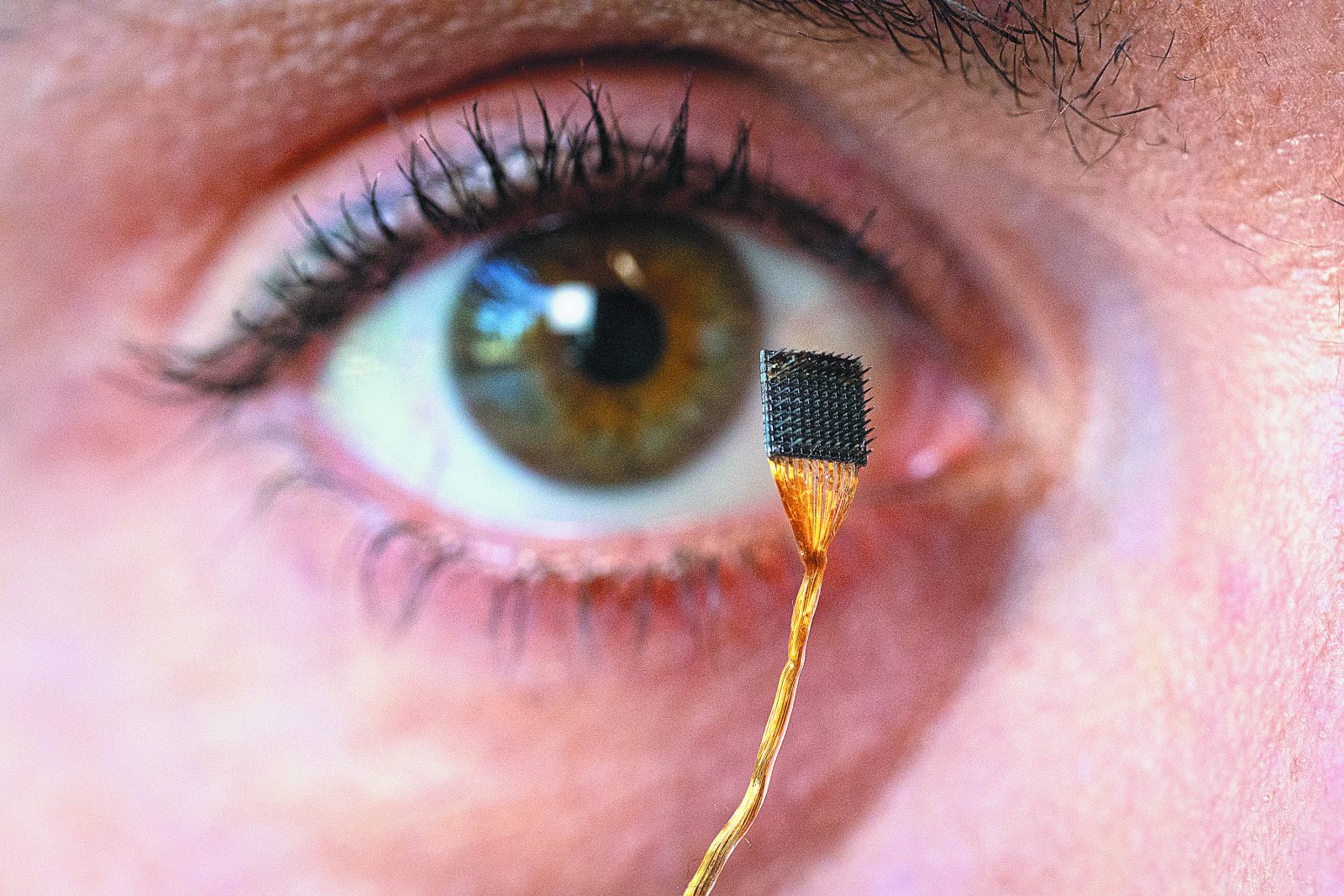

A breakthrough study at Stanford University has brought the world closer to technology that can give voice to unspoken thoughts. Scientists have developed a brain-computer interface (BCI) capable of translating internal speech, the words a person silently thinks, into audible language.

The system works by detecting brain signals with implanted microelectrodes and decoding them with the help of artificial intelligence. In trials, participants with severe paralysis caused by conditions such as ALS or stroke were able to generate words either by attempting to speak or by simply thinking them.

“This is the first time we’ve been able to capture brain activity when someone only thinks about speaking,” explained lead researcher Erin Kunz. Although the signals from internal speech were faint, they showed patterns similar to those of spoken words, enabling AI models to successfully decode them.

To protect users’ privacy, the team introduced a safeguard: the device activates only when the user thinks of a pre-set “mental password.” This prevents unintended thoughts from being converted into speech.
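The article does not say how the password check is implemented, so the following is only a toy sketch of the gating idea. It assumes the decoder emits one word at a time, and the class name `GatedDecoder` and the example passphrase are hypothetical stand-ins, not part of the Stanford system.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class GatedDecoder:
    """Hypothetical gate: passes decoded words on only after the passphrase is seen."""
    passphrase: Tuple[str, ...]
    unlocked: bool = False
    _recent: List[str] = field(default_factory=list)

    def feed(self, word: str) -> Optional[str]:
        """Take one decoded word; return it only if the gate is already open."""
        if self.unlocked:
            return word
        # Keep a sliding window of the most recent words, as long as the passphrase.
        self._recent.append(word)
        self._recent = self._recent[-len(self.passphrase):]
        if tuple(self._recent) == self.passphrase:
            self.unlocked = True  # the passphrase itself is not spoken
        return None


# Stand-in for a stream of decoded inner-speech words (invented for illustration).
gate = GatedDecoder(passphrase=("open", "sesame"))
stream = ["hello", "open", "sesame", "I", "am", "thirsty"]
spoken = [w for w in (gate.feed(x) for x in stream) if w is not None]
print(spoken)
```

In this sketch everything thought before the passphrase is discarded, which mirrors the stated goal of keeping unintended thoughts from being voiced. A real device would presumably also need a way to lock again, which is omitted here.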

The implications are significant. BCIs and related assistive technologies already help people with mobility or communication disabilities control prosthetic limbs or type text, in some cases using eye movements. But converting inner thoughts directly into spoken words could revolutionize communication for those who have lost the ability to speak.

Researchers believe that with more advanced sensors and refined AI, future devices will achieve even greater accuracy and more natural speech patterns.